Over the past decade, the field of artificial intelligence (AI) has seen striking developments. As surveyed in

[141], there now exist over twenty domains in which AI programs are performing at least as well as (if not

better than) humans. These advances have led to a massive burst of excitement in AI that is highly reminiscent

of the one that took place during the 1956-1973 boom phase of the first AI hype cycle [56]. Investors are

funding billions of dollars in AI-based research and startups [143,144,145], and futurists are again beginning to

make alarming predictions about the incipience of powerful AI [149,150,151,152]. Many have questioned the

future of humans in the job market, claiming that up to 47% of United States jobs are in the high-risk category

of being lost to automation by 2033 [146], and some have gone as far as to say that AI could spell the end of

humanity [141].

In this article we argue that the short-term effects of AI are unlikely to be as pronounced as these claims

suggest. Several of the obstacles that led to the demise of the first AI boom phase over forty years ago remain

unresolved today, and it seems that serious theoretical advances will be required to overcome them.

Moreover, the present infrastructure is ill-adapted to incorporating AI programs on a large scale, meaning that

it is improbable that AI systems will soon be able to replace humans en masse. Therefore, the predictions

mentioned above are unlikely to be met in the next fifteen years, and financiers may not receive an expected

return on their recent investments in AI.

The recent hype in AI has manifested itself in two forms: striking predictions and massive investments, both of which are discussed below.

Over the past several years, there has been a growing belief that AI is a limitless, mystical force that is (or will

soon be) able to supersede humans and solve any problem. For instance, Ray Kurzweil predicted, “artificial

intelligence will reach human levels by around 2029,” [150], and Gray Scott stated, “there is no reason and no

way that a human mind can keep up with an artificial intelligence machine by 2035.” [152]. An analogous but

more ominous sentiment was expressed by Elon Musk, who wrote, “The pace of progress in artificial

intelligence . . . is incredibly fast . . . The risk of something seriously dangerous happening is in the five-year

timeframe. 10 years at most,” [149] and later said, “with artificial intelligence we’re summoning the demon”

[152].

Although some consider Musk’s vision extreme, there is still a significant worry among researchers that strong

AI will soon have significant consequences for humanity’s future in the job market. For instance, in 2013 two

Oxford professors, Frey and Osborne, published an article [146] titled, “The Future of Employment: How

Susceptible are Jobs to Computerization?,” in which they attempted to analyze the proportion of the job market

that could become computerized within the next twenty years. They estimated that, “47% of total US

employment is in the high-risk category, meaning that associated occupations are potentially automatable over

some unspecified number of years, perhaps in a decade or two.”

Following that work, several papers have been written by various consulting firms and think tanks predicting

that between 20 and 40 percent of jobs will be lost within the next twenty years due to automation [147,148].

Such predictions have sent ripples through the boardrooms of many businesses and governing bodies of

various nations. Since AI systems are expected to reduce labor costs in the U.S. by a factor of ten, such

predictions suggest that businesses could become significantly more profitable by employing AI programs

instead of humans; however, this could force unemployment rates to reach staggering proportions, causing

enormous economic disruption worldwide.

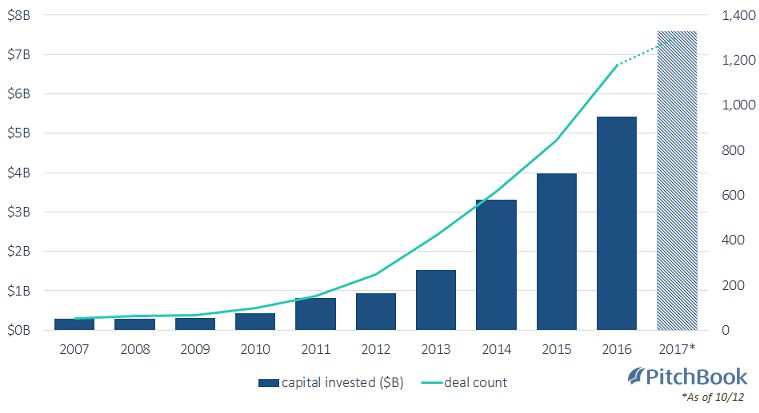

According to McKinsey and Company, non-tech companies spent between $26 billion and $39 billion on AI in

2016, and tech companies spent between $20 billion and $30 billion on AI [143]. In a similar vein, an

explosion of AI-based startups began around 2012 and continues today. In December 2017, AngelList (a

website that connects startups with angel investors and job seekers) listed 3,792 AI startups; 2,592 associated

angel investors; and 2,521 associated job vacancies. According to Pitchbook, $285 million was invested by

venture capitalists in AI startups in 2007, $4 billion in 2015, $5.4 billion in 2016, and $7.6 billion between

January 2017 and October 2017; the total venture capitalist investment in AI between 2007 and 2017 exceeds

$25 billion [144]. According to CBInsights, 658 AI startups obtained funding in 2016 alone and are actively

pursuing their business plans [145]. In fact, to obtain venture funding today, most new business plans need to

have at least some mention of AI.

The current hype in AI is immensely reminiscent of what took place during the boom phase of the first AI hype

cycle between 1956 and 1973 (surveyed in [56], the first article of this series). Indeed, following several

prominent advances in AI (such as the first self-learning program, which played checkers, and the introduction

of the neural network [5,14]), government agencies and research organizations were quick to invest massive

funds in AI research [40,41,42]. Fueled by this popularity, AI researchers were also quick to make audacious

predictions about the incipience of powerful AI. For instance, in 1961, Marvin Minsky wrote, “within our lifetime

machines may surpass us in general intelligence” [9].

However, this euphoria was short-lived. By the early 1970s, when the expectations in AI did not come to pass,

disillusioned investors withdrew their funding; this resulted in the AI bust phase when research in AI was slow

and even the term, “artificial intelligence,” was spurned.

In retrospect, the demise of the AI boom phase can be attributed to the following two major obstacles:

Computing power in the 1970s was very costly and not powerful enough to imitate the human brain. For

instance, creating an artificial neural network (ANN) the size of a human brain would have consumed the entire

U.S. GDP in 1974 [56].

Scientists did not understand how the human brain functioned and remained especially unaware of the

neurological mechanisms behind creativity, reasoning and humor. The lack of an understanding as to what

precisely machine learning programs should be trying to imitate posed a significant issue in moving the theory

of AI forward [38]. As succinctly explained by MIT professor Hubert Dreyfus, “the programs lacked the intuitive

common sense of a four-year old,” and no one knew how to proceed [154].

The first difficulty mentioned above can be classified as mechanical, whereas the second as conceptual; both

were responsible for the end of the first AI boom phase forty years ago. By 1982, Minsky himself had overturned

his previously optimistic viewpoint, saying, “I think the AI problem is one of the hardest science has ever

undertaken” [155].

Clearly, what IBM Watson did in 2011 in beating humans at the game of Jeopardy! was no ordinary feat;

however, it only provided factoids as answers to various questions in Jeopardy! Some subsequent statements

and advertisements regarding IBM Watson implied that it could help in solving some of the harder problems

concerning humanity (e.g., helping in cancer research and finding alternate therapies); however, the ensuing

work with M. D. Anderson Cancer Center and other hospitals showed that it fell far short of such expectations

[156,157]. In fact, none of the AI systems today is even close to being HAL 9000, an artificially intelligent

computer that was portrayed as an antagonist in the movie, 2001: A Space Odyssey.

What essentially happened over the past forty years was substantial progress towards the first (mechanical)

issue mentioned above. The facilitating principle here has been Moore’s law, which predicts that the number of

transistors in an electronic circuit should approximately double every two years; as a result, the cost and speed

of computing have improved by a factor of 1.1 million since the 1970s [114,115,117]. This led to ubiquitous and

inexpensive hardware and connectivity, allowing for some of the theoretical advances invented decades ago in

the first hype cycle (e.g., backpropagation algorithms) and some new techniques to perform significantly better

in practice, largely through tedious application of heuristics and engineering skills.
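
As a rough check on the scale of that improvement, the sketch below compounds one doubling every two years over forty years. The function name `moores_law_factor` and the clean two-year doubling period are illustrative assumptions rather than figures taken from [114,115,117]; the result lands in the same range as the factor of roughly one million quoted above.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_period_years`.

    Hypothetical helper for illustration; it assumes an idealized, perfectly
    regular doubling schedule.
    """
    return 2.0 ** (years / doubling_period_years)


# Forty years at one doubling every two years is twenty doublings.
factor_40_years = moores_law_factor(40)
print(f"{factor_40_years:,.0f}")  # 1,048,576
```

Twenty doublings give a factor of about 1.05 million, which is consistent in order of magnitude with the 1.1 million improvement cited in the text.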

However, what remains lacking is a conceptual breakthrough that provides insight towards the second issue

highlighted above: we remain as unsure as ever as to how to create a machine that truly imitates intelligent life

and how to endow it with “intuition” or “common sense” or the ability to perform several tasks well (like

humans). This has resulted in several conspicuous deficiencies (detailed below) in even the most modern AI

programs, which render them impractical for large-scale use.

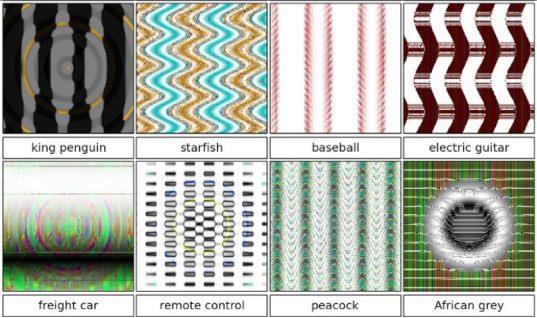

In 2015, Nguyen, Yosinski and Clune examined whether the leading image-recognizing, deep neural networks

were susceptible to false positives [158]. They generated random images by perturbing patterns, and they

showed both the original patterns and their mutated copies to these neural networks (which were trained

using labeled data from ImageNet). Although the perturbed patterns (eight of which are depicted in Figure 3

below) were essentially meaningless, they were incorrectly recognized by these networks with over 99%

confidence as a king penguin, starfish, etc. [158].

Another research group showed that, by wearing certain psychedelic spectacles, ordinary people could fool a

facial recognition system into thinking they were celebrities; this would permit people to impersonate each

other without being detected by such a system [159,160]. Similarly, researchers in 2017 added stickers to stop

signs, which caused an ANN to misclassify them, which could have grave consequences for autonomous car

driving [161]. Until these AI systems become robust enough to not be deceived by such perturbations, it will be

infeasible for industries to adopt them extensively [162].

Machine learning algorithms require several thousand to several million pictures of cats (and non-cats) before

they can start to accurately distinguish them. This could pose a significant issue for an AI system if it operates

in a situation that is substantially different from situations in the past (e.g., due to a stock market crash); since

the AI system may not have a large enough data set to be adequately trained on, it may just fail.

Although deep learning algorithms sometimes produce superior results, they are usually “black boxes,” and

even researchers are currently unable to develop a theoretical framework for understanding how or why they

give the answers they do. For example, the Deep Patient program built by Dudley and his colleagues at Mt.

Sinai hospital can largely anticipate the onset of schizophrenia, but Dudley regretfully remarked, “we can build

these models, but we don’t know how they work” [109,141].

Since we do not understand the direct causal relationship between the data processed by an AI program and

its eventual answer, systematically improving AI programs remains a serious complication and is typically done

through trial and error. For the same reason, it is difficult to fix such solutions if something goes wrong

[163,171]. In view of these issues and the fact that humans are taught to “treat” causes (and not symptoms), it

is unlikely that many industries will be able to rely on deep learning programs, at least any time soon. For

instance, a doctor is not likely to administer a drug to a patient based solely on a program’s prediction that the

patient will become schizophrenic soon. Indeed, if the program’s prediction was inaccurate and the patient

suffers an illness due to a side effect of the inappropriately administered drug, the doctor could face censure,

with his or her only defense being that the trust in the AI system was misplaced.

As mentioned previously, Moore’s law, which predicts an exponential growth rate for the number of transistors

in a circuit, has been the most influential reason for our progress in AI. The size of today’s transistors can be

reduced by at most a factor of 4,900 before reaching the theoretical limit of one silicon atom. In 2015, Moore

himself said, “I see Moore’s law dying here in the next decade or so” [117]. Thus, it is unclear whether strong,

general-purpose AI systems will be developed before the demise of Moore’s law.

Even if the previously mentioned issues with AI systems are resolved, the following reasons indicate that a

significant amount of effort must still be exerted to adapt the infrastructure of companies, organizations and

governments to widely incorporate AI programs:

Although Frey and Osborne predicted that, “47% of total US employment is in the high-risk category, … perhaps

in a decade or two,” [146], research divisions of large technology companies, strategy companies and think

tanks have long had a history of underestimating – at least by a factor of two – how long it would take for

specific technological advances to affect human society. The reason is that these analyses often fail to address

a crucial point: the global economy is not frictionless — changes take time. Humans are quick to adapt to

modern technology if it helps them but are very resistant if it hurts them, and this phenomenon is hard to

quantify.

For example, over the past four decades, there has already been a significant opportunity to reduce labor costs

– by a factor of four – in high-wage countries by using outsourcing. Outsourcing of manufacturing jobs to lower

wage countries from the U.S. started around 1979, and yet the U.S. had cumulatively lost fewer than 8 million

manufacturing jobs due to outsourcing by 2016 [168]. Similarly, outsourcing of service jobs from the U.S.

began in the 1990s, but the U.S. has cumulatively lost around 5 million such jobs. Hence, job losses due to

outsourcing have totaled around 13 million, or around 8% of the 161 million U.S. working population. If the

global economy were frictionless, most if not all 47% of the jobs predicted by Frey and Osborne would have

been lost to outsourcing. The fact that this did not happen during the last 20 to 40 years brings some doubt to

the claim that it will happen within the next fifteen years due to AI, especially in view of the above-mentioned

inadequacies of the modern infrastructure needed to incorporate AI programs.
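
The arithmetic behind this comparison can be sketched as follows. The job counts and the 47% figure come from the text above; the variable names are illustrative, and the 8 million manufacturing figure is treated as an upper bound.

```python
# Figures quoted in the text; names are illustrative.
manufacturing_jobs_lost = 8_000_000   # upper bound ("fewer than 8 million")
service_jobs_lost = 5_000_000         # "around 5 million"
working_population = 161_000_000      # U.S. working population
frey_osborne_share = 0.47             # share of jobs rated high-risk in [146]

total_lost = manufacturing_jobs_lost + service_jobs_lost
share_lost = total_lost / working_population

print(f"{total_lost:,}")                         # 13,000,000
print(f"{share_lost:.1%}")                       # 8.1%
print(f"{frey_osborne_share / share_lost:.1f}")  # 5.8
```

In other words, the share of jobs rated high-risk by Frey and Osborne is nearly six times the share actually lost to outsourcing over several decades.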

Furthermore, unless there are major conceptual breakthroughs, the previously mentioned deficiencies of AI

programs also make significant job loss to automation unlikely within the next fifteen years. Indeed, AI systems

do very well at what they have learned but falter quickly if their rules are perturbed minutely. Humans could

easily exploit this fact if their jobs were at stake, e.g., if autonomous driving software were used, taxi drivers

could collude with others to introduce malicious software that causes accidents (or place stickers on stop signs,

as mentioned above). Similarly, ANNs can be defeated by attacking their defense with continuously mutating

malware [161,162]; miscreants can use this to smuggle false data into the ANN training sets, thereby disrupting

the learning process of the AI system. Although these activities would certainly be illegal if AI systems gained

wide usage, the risks and consequences of such illicit actions would likely be too great for large-scale AI

incorporation to gain public support.

The last point worth mentioning is that additional jobs will be created over the next fifteen years, which were

not accounted for in the analyses of Frey and Osborne [146] or in any of the subsequent analyses [147,148].

Due to the reasons mentioned above, the adoption and implementation of AI systems are likely to be slower

than what investors have envisioned. It is therefore unclear whether financiers will reap the benefits of their AI

investments, which have totaled over $25 billion during the last ten years, especially since only 11 of the

funded private companies have a market valuation of $1 billion or more. In fact, out of 70 merger and

acquisition deals in AI since 2012, 75 percent sold below $50 million and were “acqui-hires” (companies

acquired for talent and not business performance); most of the companies financed by investors raised less

than $10 million [169]. It is possible, at least for now, that small funding in AI for a brief period might yield good

but not outstanding returns. However, this is in deep contrast with the standard investing model, which

advocates for investors to invest more money and get 8-14 times their money back within 4-7 years.
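
To put that target in annual terms, the sketch below converts a return multiple over a holding period into a compound annual growth rate. The helper name `annualized_return` is hypothetical, and the calculation assumes a single investment with a single exit and no interim cash flows, which simplifies how venture returns are actually realized.

```python
def annualized_return(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by `multiple` x money back in `years`."""
    return multiple ** (1.0 / years) - 1.0


# Bounds of the "8-14 times within 4-7 years" range quoted in the text.
slowest = annualized_return(multiple=8, years=7)    # roughly 35% per year
fastest = annualized_return(multiple=14, years=4)   # roughly 93% per year

print(f"{slowest:.0%} to {fastest:.0%} per year")
```

Even the low end of that range implies compounding at about a third per year, which helps explain why "good but not outstanding" returns fall short of investor expectations.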

The past several years have marked the beginning of a new hype cycle in AI. Recent developments in the field

(surveyed in [141]) have captured the interest of researchers and the public, who are beginning to make

alarming predictions about the incipience of powerful AI, and of financiers, who are beginning to expend

massive investments on AI research and startups. This is reminiscent of the first AI boom phase, which took

place over forty-five years ago. There too, the field of AI saw many striking advances, audacious predictions,

and massive investments. Eventually, that boom phase collapsed, primarily due to two reasons. The first was

mechanical, due to limited and costly computational power in the 1970s; the second was conceptual, due to a

lack of understanding of “intuition” and “human thought,” and how to make computers that could imitate

humans.

Largely due to Moore’s law, the first issue has been substantially resolved; the cost and power of hardware

have improved by a factor of over one million during the past forty years, allowing for ubiquitous and

affordable hardware that could be used to make better AI programs. The second issue, however, is still

unresolved. In addition, there are major deficiencies in even the most modern AI programs that remain

sensitive to perturbations, are inefficient learners and are difficult to improve upon. Even if these issues were

resolved, the current infrastructures of businesses and governments seem ill-equipped to quickly incorporate

AI programs on a large scale. Hence, it is unlikely that the audacious expectations by researchers, investors,

and the public (regarding AI) will be realized in the next fifteen years.

While much of the excitement in AI has led to striking developments [141], much of it also appears to be based on “irrational exuberance” [52] rather than on facts. It seems that we still require significant breakthroughs

before AI systems can truly imitate intelligent life. As John McCarthy noted in 1977, creating a human-like AI

computer will require, “conceptual breakthroughs,” because, “what you want is 1.7 Einsteins and 0.3 of the

Manhattan Project, and you want the Einsteins first. I believe it’ll take 5 to 500 years” [43]. His statement seems

to be just as applicable now, over forty years later.

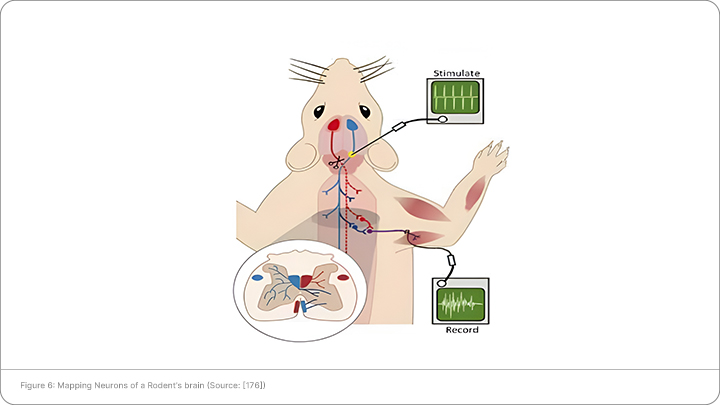

However, researchers are continuing to pioneer paths that might lead to such breakthroughs. For example,

since ANNs and reinforcement learning systems derive their inspiration from neuroscience, some academics

believe that new conceptual insights could require multidisciplinary research by combining biology, math and

computer science. In fact, the MICrONS (Machine Intelligence from Cortical Networks) project is a first attempt at

mapping a cubic millimeter of a rodent’s brain, which contains around 100,000 neurons and about a billion synapses. The U.S.

Government (via IARPA) has funded this hundred-million-dollar research initiative, and neuroscientists and

computer scientists from Harvard University, Princeton University, Baylor College of Medicine and the Allen

Institute of Artificial Intelligence are collaborating to make it successful [170]. The computational aims for

MICrONS include learning the ability to perform complex information processing tasks such as one-shot

learning, unsupervised clustering, and scene parsing, with the eventual goal of achieving human-like

proficiency. If successful, this project may create the foundational blocks for the next generation of AI

systems.

At Scry Analytics Inc ("us", "we", "our" or the "Company") we value your privacy and the importance of safeguarding your data. This Privacy Policy (the "Policy") describes our privacy practices for the activities set out below. As per your rights, we inform you how we collect, store, access, and otherwise process information relating to individuals. In this Policy, personal data (“Personal Data”) refers to any information that on its own, or in combination with other available information, can identify an individual.

We are committed to protecting your privacy in accordance with the highest level of privacy regulation. As such, we follow the obligations under the below regulations:

This policy applies to the Scry Analytics, Inc. websites, domains, applications, services, and products.

This Policy does not apply to third-party applications, websites, products, services or platforms that may be accessed through (non-) links that we may provide to you. These sites are owned and operated independently from us, and they have their own separate privacy and data collection practices. Any Personal Data that you provide to these websites will be governed by the third-party’s own privacy policy. We cannot accept liability for the actions or policies of these independent sites, and we are not responsible for the content or privacy practices of such sites.

This Policy applies when you interact with us by doing any of the following:

What Personal Data We Collect

When attempt to contact us or make a purchase, we collect the following types of Personal Data:

This includes:

Account Information such as your name, email address, and password

Automated technologies or interactions: As you interact with our website, we may automatically collect the following types of data (all as described above): Device Data about your equipment, Usage Data about your browsing actions and patterns, and Contact Data where tasks carried out via our website remain uncompleted, such as incomplete orders or abandoned baskets. We collect this data by using cookies, server logs and other similar technologies. Please see our Cookie section (below) for further details.

If you provide us, or our service providers, with any Personal Data relating to other individuals, you represent that you have the authority to do so and acknowledge that it will be used in accordance with this Policy. If you believe that your Personal Data has been provided to us improperly, or to otherwise exercise your rights relating to your Personal Data, please contact us by using the information set out in the “Contact us” section below.

When you visit a Scry Analytics, Inc. website, we automatically collect and store information about your visit using browser cookies (files which are sent by us to your computer), or similar technology. You can instruct your browser to refuse all cookies or to indicate when a cookie is being sent. The Help Feature on most browsers will provide information on how to accept cookies, disable cookies or to notify you when receiving a new cookie. If you do not accept cookies, you may not be able to use some features of our Service and we recommend that you leave them turned on.

We also process information when you use our services and products. This information may include:

We may receive your Personal Data from third parties such as companies subscribing to Scry Analytics, Inc. services, partners and other sources. This Personal Data is not collected by us but by a third party and is subject to the relevant third party’s own separate privacy and data collection policies. We do not have any control or input on how your Personal Data is handled by third parties. As always, you have the right to review and rectify this information. If you have any questions you should first contact the relevant third party for further information about your Personal Data.

Our websites and services may contain links to other websites, applications and services maintained by third parties. The information practices of such other services, or of social media networks that host our branded social media pages, are governed by third parties’ privacy statements, which you should review to better understand those third parties’ privacy practices.

We collect and use your Personal Data with your consent to provide, maintain, and develop our products and services and understand how to improve them.

These purposes include:

Where we process your Personal Data to provide a product or service, we do so because it is necessary to perform contractual obligations. All of the above processing is necessary in our legitimate interests to provide products and services and to maintain our relationship with you and to protect our business for example against fraud. Consent will be required to initiate services with you. New consent will be required if any changes are made to the type of data collected. Within our contract, if you fail to provide consent, some services may not be available to you.

Where possible, we store and process data on servers within the general geographical region where you reside (note: this may not be within the country in which you reside). Your Personal Data may also be transferred to, and maintained on, servers residing outside of your state, province, country or other governmental jurisdiction where the data laws may differ from those in your jurisdiction. We will take appropriate steps to ensure that your Personal Data is treated securely and in accordance with this Policy as well as applicable data protection law.Data may be kept in other countries that are considered adequate under your laws.

We will share your Personal Data with third parties only in the ways set out in this Policy or set out at the point when the Personal Data is collected.

We also use Google Analytics to help us understand how our customers use the site. You can read more about how Google uses your Personal Data here: Google Privacy Policy

You can also opt-out of Google Analytics here: https://tools.google.com/dlpage/gaoptout

We may use or disclose your Personal Data in order to comply with a legal obligation, in connection with a request from a public or government authority, or in connection with court or tribunal proceedings, to prevent loss of life or injury, or to protect our rights or property. Where possible and practical to do so, we will tell you in advance of such disclosure.

We may use a third party service provider, independent contractors, agencies, or consultants to deliver and help us improve our products and services. We may share your Personal Data with marketing agencies, database service providers, backup and disaster recovery service providers, email service providers and others but only to maintain and improve our products and services. For further information on the recipients of your Personal Data, please contact us by using the information in the “Contacting us” section below.

A cookie is a small file with information that your browser stores on your device. Information in this file is typically shared with the owner of the site in addition to potential partners and third parties to that business. The collection of this information may be used in the function of the site and/or to improve your experience.

To give you the best experience possible, we use the following types of cookies: Strictly Necessary. As a web application, we require certain necessary cookies to run our service.

We use preference cookies to help us remember the way you like to use our service. Some cookies are used to personalize content and present you with a tailored experience. For example, location could be used to give you services and offers in your area. Analytics. We collect analytics about the types of people who visit our site to improve our service and product.

So long as the cookie is not strictly necessary, you may opt in or out of cookie use at any time. To alter the way in which we collect information from you, visit our Cookie Manager.

A cookie is a small file with information that your browser stores on your device. Information in this file is typically shared with the owner of the site in addition to potential partners and third parties to that business. The collection of this information may be used in the function of the site and/or to improve your experience.

So long as the cookie is not strictly necessary, you may opt in or out of cookie use at any time. To alter the way in which we collect information from you, visit our Cookie Manager.

We will only retain your Personal Data for as long as necessary for the purpose for which that data was collected and to the extent required by applicable law. When we no longer need Personal Data, we will remove it from our systems and/or take steps to anonymize it.

If we are involved in a merger, acquisition or asset sale, your personal information may be transferred. We will provide notice before your personal information is transferred and becomes subject to a different Privacy Policy. Under certain circumstances, we may be required to disclose your personal information if required to do so by law or in response to valid requests by public authorities (e.g. a court or a government agency).

We have appropriate organizational safeguards and security measures in place to protect your Personal Data from being accidentally lost, used or accessed in an unauthorized way, altered or disclosed. The communication between your browser and our website uses a secure encrypted connection wherever your Personal Data is involved. We require any third party who is contracted to process your Personal Data on our behalf to have security measures in place to protect your data and to treat such data in accordance with the law. In the unfortunate event of a Personal Data breach, we will notify you and any applicable regulator when we are legally required to do so.

We do not knowingly collect Personal Data from children under the age of 18 Years.

Depending on your geographical location and citizenship, your rights are subject to local data privacy regulations. These rights may include:

Right to Access (PIPEDA, GDPR Article 15, CCPA/CPRA, CPA, VCDPA, CTDPA, UCPA, LGPD, POPIA)

You have the right to learn whether we are processing your Personal Data and to request a copy of the Personal Data we are processing about you.

Right to Rectification (PIPEDA, GDPR Article 16, CPRA, CPA, VCDPA, CTDPA, LGPD, POPIA)

You have the right to have incomplete or inaccurate Personal Data that we process about you rectified.

Right to be Forgotten (right to erasure) (GDPR Article 17, CCPA/CPRA, CPA, VCDPA, CTDPA, UCPA, LGPD, POPIA)

You have the right to request that we delete Personal Data that we process about you, unless we need to retain such data in order to comply with a legal obligation or to establish, exercise or defend legal claims.

Right to Restriction of Processing (GDPR Article 18, LGPD)

You have the right to restrict our processing of your Personal Data under certain circumstances. In this case, we will not process your Data for any purpose other than storing it.

Right to Portability (PIPEDA, GDPR Article 20, LGPD)

You have the right to obtain Personal Data we hold about you, in a structured, electronic format, and to transmit such Personal Data to another data controller, where this is (a) Personal Data which you have provided to us, and (b) if we are processing that data on the basis of your consent or to perform a contract with you or the third party that subscribes to services.

Right to Opt Out (CPRA, CPA, VCDPA, CTDPA, UCPA)

You have the right to opt out of the processing of your Personal Data for purposes of: (1) Targeted advertising; (2) The sale of Personal Data; and/or (3) Profiling in furtherance of decisions that produce legal or similarly significant effects concerning you. Under CPRA, you have the right to opt out of the sharing of your Personal Data with third parties and to limit our use and disclosure of your Sensitive Personal Data to uses necessary to provide the products and services reasonably expected by you.

Right to Objection (GDPR Article 21, LGPD, POPIA)

Where the legal justification for our processing of your Personal Data is our legitimate interest, you have the right to object to such processing on grounds relating to your particular situation. We will abide by your request unless we have compelling legitimate grounds for processing which override your interests and rights, or if we need to continue to process the Personal Data for the establishment, exercise or defense of a legal claim.

Nondiscrimination and nonretaliation (CCPA/CPRA, CPA, VCDPA, CTDPA, UCPA)

You have the right not to be denied service or have an altered experience for exercising your rights.

File an Appeal (CPA, VCDPA, CTDPA)

You have the right to file an appeal based on our response to you exercising any of these rights. In the event you disagree with how we resolved the appeal, you have the right to contact the attorney general located here:

If you are based in Colorado, please visit this website to file a complaint. If you are based in Virginia, please visit this website to file a complaint. If you are based in Connecticut, please visit this website to file a complaint.

File a Complaint (GDPR Article 77, LGPD, POPIA)

You have the right to bring a claim before your competent data protection authority. If you are based in the EEA, please visit this website (http://ec.europa.eu/newsroom/article29/document.cfm?action=display&doc_id=50061) for a list of local data protection authorities.

If you have consented to our processing of your Personal Data, you have the right to withdraw your consent at any time, free of charge, such as where you wish to opt out from marketing messages that you receive from us. If you wish to withdraw your consent, please contact us using the information found at the bottom of this page.

You can make a request to exercise any of these rights in relation to your Personal Data by sending the request to our privacy team by using the form below.

For your own privacy and security, at our discretion, we may require you to prove your identity before providing the requested information.

We may modify this Policy at any time. If we make changes to this Policy then we will post an updated version of this Policy at this website. When using our services, you will be asked to review and accept our Privacy Policy. In this manner, we may record your acceptance and notify you of any future changes to this Policy.

To request a copy for your information, unsubscribe from our email list, request for your data to be deleted, or ask a question about your data privacy, we've made the process simple:

Our aim is to keep this Agreement as readable as possible, but in some cases for legal reasons, some of the language is required "legalese".

These terms of service are entered into by and between You and Scry Analytics, Inc., ("Company," "we," "our," or "us"). The following terms and conditions, together with any documents they expressly incorporate by reference (collectively "Terms of Service"), govern your access to and use of www.scryai.com, including any content, functionality, and services offered on or through www.scryai.com (the "Website").

Please read the Terms of Service carefully before you start to use the Website.

By using the Website (or by clicking to accept or agree to the Terms of Service when this option is made available to you), you accept and agree to be bound and abide by these Terms of Service and our Privacy Policy, incorporated herein by reference. If you do not want to agree to these Terms of Service, you must not access or use the Website.

By using the Website, you accept and agree to be bound by and comply with these Terms of Service. You represent and warrant that you are of the legal age of majority under applicable law to form a binding contract with us, and you agree that if you access the Website from a jurisdiction where it is not permitted, you do so at your own risk.

We may revise and update these Terms of Service from time to time in our sole discretion. All changes are effective immediately when we post them and apply to all access to and use of the Website thereafter.

Continuing to use the Website following the posting of revised Terms of Service means that you accept and agree to the changes. You are expected to check this page each time you access this Website so you are aware of any changes, as they are binding on you.

You are required to ensure that all persons who access the Website are aware of this Agreement and comply with it. It is a condition of your use of the Website that all the information you provide on the Website is correct, current, and complete.

You are solely and entirely responsible for your use of the website and your computer, internet and data security.

You may use the Website only for lawful purposes and in accordance with these Terms of Service. You agree not to use the Website:

The Website and its entire contents, features, and functionality (including but not limited to all information, software, text, displays, images, video, and audio, and the design, selection, and arrangement thereof) are owned by the Company, its licensors, or other providers of such material and are protected by United States and international copyright, trademark, patent, trade secret, and other intellectual property or proprietary rights laws.

These Terms of Service permit you to use the Website for your personal, non-commercial use only. You must not reproduce, distribute, modify, create derivative works of, publicly display, publicly perform, republish, download, store, or transmit any of the material on our Website, except as follows:

You must not access or use for any commercial purposes any part of the website or any services or materials available through the Website.

If you print, copy, modify, download, or otherwise use or provide any other person with access to any part of the Website in breach of the Terms of Service, your right to use the Website will stop immediately and you must, at our option, return or destroy any copies of the materials you have made. No right, title, or interest in or to the Website or any content on the Website is transferred to you, and all rights not expressly granted are reserved by the Company. Any use of the Website not expressly permitted by these Terms of Service is a breach of these Terms of Service and may violate copyright, trademark, and other laws.

The Website may provide you with the opportunity to create, submit, post, display, transmit, publish, distribute, or broadcast content and materials to us or in the Website, including but not limited to text, writings, video, audio, photographs, graphics, comments, ratings, reviews, feedback, or personal information or other material (collectively, "Content"). You are responsible for your use of the Website and for any Content you provide, including compliance with applicable laws, rules, and regulations.

All User Submissions must comply with the Submission Standards and Prohibited Activities set out in these Terms of Service.

Any User Submissions you post to the Website will be considered non-confidential and non-proprietary. By submitting, posting, or displaying Content on or through the Website, you grant us a worldwide, non-exclusive, royalty-free license to use, copy, reproduce, process, disclose, adapt, modify, publish, transmit, display and distribute such Content for any purpose, commercial, advertising, or otherwise, and to prepare derivative works of, or incorporate in other works, such Content, and grant and authorize sublicenses of the foregoing. The use and distribution may occur in any media format and through any media channels.

We do not assert any ownership over your Content. You retain full ownership of all of your Content and any intellectual property rights or other proprietary rights associated with your Content. We are not liable for any statement or representations in your Content provided by you in any area in the Website. You are solely responsible for your Content related to the Website and you expressly agree to exonerate us from any and all responsibility and to refrain from any legal action against us regarding your Content. We are not responsible or liable to any third party for the content or accuracy of any User Submissions posted by you or any other user of the Website. User Submissions are not endorsed by us and do not necessarily represent our opinions or the view of any of our affiliates or partners. We do not assume liability for any User Submission or for any claims, liabilities, or losses resulting from any review.

We have the right, in our sole and absolute discretion, (1) to edit, redact, or otherwise change any Content; (2) to recategorize any Content to place them in more appropriate locations in the Website; and (3) to prescreen or delete any Content at any time and for any reason, without notice. We have no obligation to monitor your Content. Any use of the Website in violation of these Terms of Service may result in, among other things, termination or suspension of your right to use the Website.

These Submission Standards apply to any and all User Submissions. User Submissions must in their entirety comply with all the applicable federal, state, local, and international laws and regulations. Without limiting the foregoing, User Submissions must not:

We have the right, without provision of notice to:

You waive and hold harmless the Company and its parent, subsidiaries, affiliates, and their respective directors, officers, employees, agents, service providers, contractors, licensors, licensees, suppliers, and successors from any and all claims resulting from any action taken by the Company and any of the foregoing parties relating to any investigations by either the Company or law enforcement authorities.

For your convenience, this Website may provide links or pointers to third-party sites or third-party content. We make no representations about any other websites or third-party content that may be accessed from this Website. If you choose to access any such sites, you do so at your own risk. We have no control over the third-party content or any such third-party sites and accept no responsibility for such sites or for any loss or damage that may arise from your use of them. You are subject to any terms and conditions of such third-party sites.

This Website may provide certain social media features that enable you to:

You may use these features solely as they are provided by us and solely with respect to the content they are displayed with. Subject to the foregoing, you must not:

The Website from which you are linking, or on which you make certain content accessible, must comply in all respects with the Submission Standards set out in these Terms of Service.

You agree to cooperate with us in causing any unauthorized framing or linking immediately to stop.

We reserve the right to withdraw linking permission without notice.

We may disable all or any social media features and any links at any time without notice in our discretion.

You understand and agree that your use of the website, its content, and any goods, digital products, services, information or items found or attained through the website is at your own risk. The website, its content, and any goods, services, digital products, information or items found or attained through the website are provided on an "as is" and "as available" basis, without any warranties or conditions of any kind, either express or implied including, but not limited to, the implied warranties of merchantability, fitness for a particular purpose, or non-infringement. The foregoing does not affect any warranties that cannot be excluded or limited under applicable law.

You acknowledge and agree that the company and its respective directors, officers, employees, agents, service providers, contractors, licensors, licensees, suppliers, and successors make no warranty, representation, or endorsement with respect to the completeness, security, reliability, suitability, accuracy, currency, or availability of the website or its contents, or that any goods, services, digital products, information or items found or attained through the website will be accurate, reliable, error-free, or uninterrupted, that defects will be corrected, or that our website, its content, or the server that makes it available are free of viruses or other harmful components or destructive code.

Except where such exclusions are prohibited by law, in no event shall the company nor its respective directors, officers, employees, agents, service providers, contractors, licensors, licensees, suppliers, or successors be liable under these terms of service to you or any third-party for any consequential, indirect, incidental, exemplary, special, or punitive damages whatsoever, including any damages for business interruption, loss of use, data, revenue or profit, cost of capital, loss of business opportunity, loss of goodwill, whether arising out of breach of contract, tort (including negligence), any other theory of liability, or otherwise, regardless of whether such damages were foreseeable and whether or not the company was advised of the possibility of such damages.

To the maximum extent permitted by applicable law, you agree to defend, indemnify, and hold harmless Company, its parent, subsidiaries, affiliates, and their respective directors, officers, employees, agents, service providers, contractors, licensors, suppliers, successors, and assigns from and against any claims, liabilities, damages, judgments, awards, losses, costs, expenses, or fees (including reasonable attorneys' fees) arising out of or relating to your breach of these Terms of Service or your use of the Website including, but not limited to, third-party sites and content, any use of the Website's content and services other than as expressly authorized in these Terms of Service or any use of any goods, digital products and information purchased from this Website.

At Company’s sole discretion, it may require you to submit any disputes arising from these Terms of Service or use of the Website, including disputes arising from or concerning their interpretation, violation, invalidity, non-performance, or termination, to final and binding arbitration under the Rules of Arbitration of the American Arbitration Association applying Ontario law. (If multiple jurisdictions, under applicable laws).

Any cause of action or claim you may have arising out of or relating to these Terms of Service or the Website must be commenced within one (1) year after the cause of action accrues; otherwise, such cause of action or claim is permanently barred.

Your provision of personal information through the Website is governed by our privacy policy located at the "Privacy Policy".

The Website and these Terms of Service will be governed by and construed in accordance with the laws of the Province of Ontario and any applicable federal laws applicable therein, without giving effect to any choice or conflict of law provision, principle, or rule and notwithstanding your domicile, residence, or physical location. Any action or proceeding arising out of or relating to this Website and/or under these Terms of Service will be instituted in the courts of the Province of Ontario, and each party irrevocably submits to the exclusive jurisdiction of such courts in any such action or proceeding. You waive any and all objections to the exercise of jurisdiction over you by such courts and to the venue of such courts.

If you are a citizen of any European Union country or Switzerland, Norway or Iceland, the governing law and forum shall be the laws and courts of your usual place of residence.

The parties agree that the United Nations Convention on Contracts for the International Sale of Goods will not govern these Terms of Service or the rights and obligations of the parties under these Terms of Service.

If any provision of these Terms of Service is illegal or unenforceable under applicable law, the remainder of the provision will be amended to achieve as closely as possible the effect of the original term and all other provisions of these Terms of Service will continue in full force and effect.

These Terms of Service constitute the entire and only Terms of Service between the parties in relation to their subject matter and replace and extinguish all prior or simultaneous Terms of Service, undertakings, arrangements, understandings or statements of any nature made by the parties or any of them whether oral or written (and, if written, whether or not in draft form) with respect to such subject matter. Each of the parties acknowledges that they are not relying on any statements, warranties or representations given or made by any of them in relation to the subject matter of these Terms of Service, save those expressly set out in these Terms of Service, and that they shall have no rights or remedies with respect to such subject matter otherwise than under these Terms of Service save to the extent that they arise out of the fraud or fraudulent misrepresentation of another party. No variation of these Terms of Service shall be effective unless it is in writing and signed by or on behalf of Company.

No failure to exercise, and no delay in exercising, on the part of either party, any right or any power hereunder shall operate as a waiver thereof, nor shall any single or partial exercise of any right or power hereunder preclude further exercise of that or any other right hereunder.

We may provide any notice to you under these Terms of Service by: (i) sending a message to the email address you provide to us and consent to us using; or (ii) by posting to the Website. Notices sent by email will be effective when we send the email and notices we provide by posting will be effective upon posting. It is your responsibility to keep your email address current.

To give us notice under these Terms of Service, you must contact us by personal delivery, overnight courier, or registered or certified mail at Scry Analytics Inc., 2635 North 1st Street, Suite 200, San Jose, CA 95134, USA. We may update the address for notices to us by posting a notice on this Website. Notices provided by personal delivery will be effective immediately once personally received by an authorized representative of Company. Notices provided by overnight courier or registered or certified mail will be effective once received and where confirmation has been provided to evidence the receipt of the notice.
